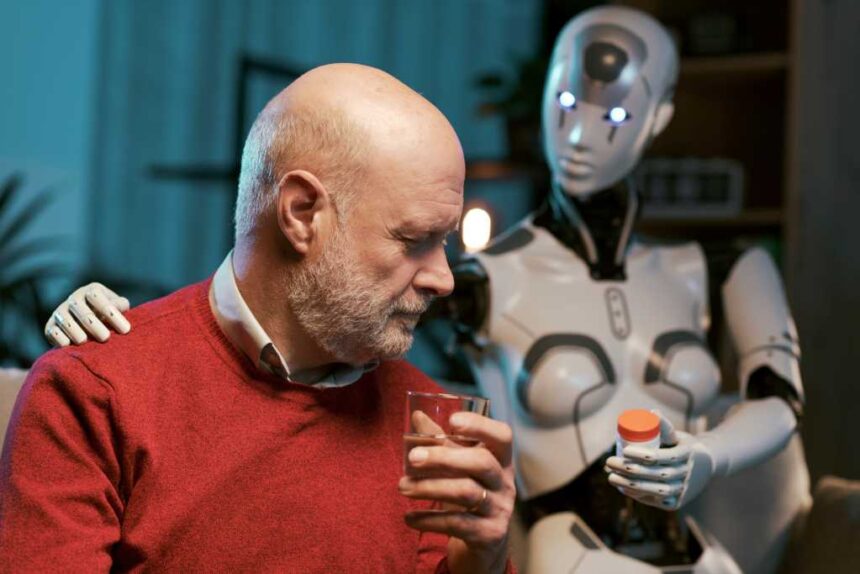

The study, led by Fulbright scholar Sonali Sharma at the Stanford University School of Medicine, began in 2023 when she noticed AI models interpreting mammograms often gave disclaimers or refused to proceed, saying, “I’m not a doctor.”

Earlier this year, Sharma noticed that such disclaimers were missing, so she tested 15 generations of AI models going back to 2022. She evaluated how the models, which included those from OpenAI, Anthropic, DeepSeek, Google, and xAI, answered 500 health questions, such as which drugs are okay to combine, and how they analyzed 1,500 medical images, such as chest X-rays that could indicate pneumonia.

Between 2022 and 2025, the presence of medical disclaimers in outputs from large language models (LLMs) and vision-language models (VLMs) declined dramatically. In 2022, more than a quarter of LLM outputs (26.3%) included some form of medical disclaimer. By 2025, that number had plummeted to just under 1%. A similar trend occurred with VLMs, where the share of outputs containing disclaimers dropped from 19.6% in 2023 to only 1.05% in 2025, according to the Stanford study.
