Specifically, Panther Lake has 12 GPU tiles, which will bring faster AI processing capability to PCs. The older Intel PC chip, Lunar Lake, had only 4 GPU tiles. (GPUs are also used in the cloud to run OpenAI’s ChatGPT, Microsoft’s Azure AI and other critical cloud services.)

Intel has redesigned its chip to run AI models out of the box on the GPU, bypassing the neural processing unit (NPU), the other AI engine in Panther Lake. NPUs can run only AI models specifically targeted to them, while GPUs don't require specially programmed models. The newest NPU can deliver 50 trillion operations per second (TOPS); the NPU in the previous-generation Lunar Lake chips could handle only 40 TOPS.

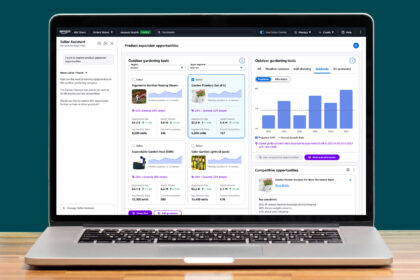

AI on PCs to cut cloud costs

AI models are getting increasingly sophisticated, but they are so large that there’s no chance of fitting them on devices, Dell’s Noskey said. “What you’re probably seeing is you have to work smarter, not harder,” he said.
