If you’re familiar with my views on the anthropomorphization (i.e., “humanizing”) of robots and AI, you might guess what I’m about to say. Amazon says its Vulcan robot can feel (without quotation marks). This isn’t true.

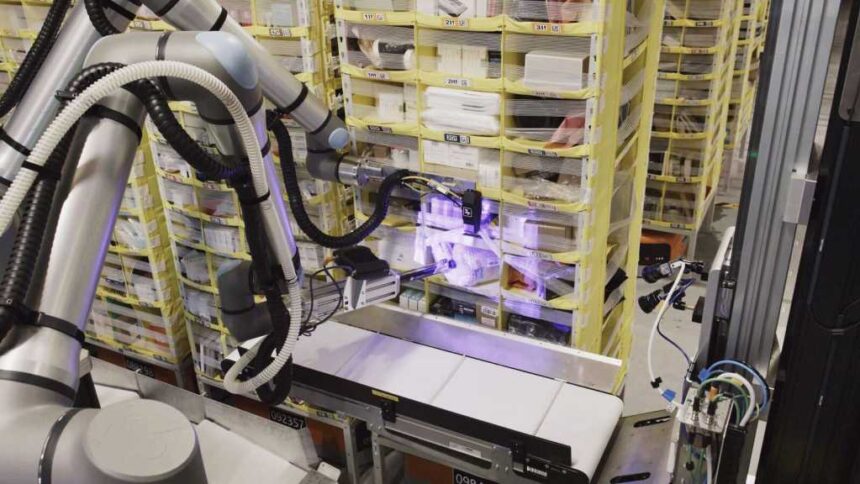

When a robot like Vulcan “feels” something, it uses sensors that measure force, pressure, and sometimes texture or shape, turning these signals into data that AI can interpret. Vulcan’s sensors are built into its gripper and joints, so when it touches or grasps an object, it detects how much force it’s applying and the contours it’s encountering. Machine learning algorithms then help Vulcan decide how to adjust its grip or movement based on this feedback.
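To make that concrete, here is a minimal sketch of what a force-feedback grip loop could look like. It is not Amazon's code or Vulcan's actual method: the `GripController` class, the simple proportional rule standing in for the machine learning step, the toy sensor model, and every numeric value are my own assumptions for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GripController:
    """Hypothetical proportional controller; not Vulcan's real algorithm."""
    target_force: float       # desired contact force in newtons (assumed)
    gain: float = 0.5         # fraction of the error corrected per step (assumed)
    max_force: float = 20.0   # safety ceiling in newtons (assumed)

    def next_command(self, command: float, measured_force: float) -> float:
        """Return the next force command given the latest sensor reading."""
        error = self.target_force - measured_force
        adjusted = command + self.gain * error
        # Clamp so the gripper never commands a negative or excessive force.
        return max(0.0, min(self.max_force, adjusted))


def simulate(controller: GripController, steps: int = 20) -> List[float]:
    """Run the loop against a toy sensor that reads back 80% of the command."""
    command = 0.0
    readings = []
    for _ in range(steps):
        measured = 0.8 * command  # stand-in for a real force sensor
        readings.append(measured)
        command = controller.next_command(command, measured)
    return readings


if __name__ == "__main__":
    readings = simulate(GripController(target_force=5.0))
    print(f"first reading: {readings[0]:.2f} N, last reading: {readings[-1]:.2f} N")
```

Every step here is arithmetic on a number: read a force, subtract it from a target, nudge the command. The measured force settles near the target, and nothing in the loop experiences anything.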

By contrast, a person feels with a network of millions of nerve endings in the skin, especially in the fingertips. These nerves send detailed, real-time information to the brain about pressure, temperature, texture, pain, and even the direction of force. The human sense of touch is deeply connected to memory, emotion, judgment, and consciousness.
