- Search documentation.

- Execute autonomous tasks.

- Explore file structures, understand how the pieces connect, and spot necessary changes before writing code.

- Update project settings.

- Verify work visually by capturing Xcode Previews and iterating through builds and fixes, even capturing screenshots to show that the code functions properly.

Developers can also combine all these features, using AI to vibe code apps, build images, develop file structures, and verify app behavior as they iterate on the app. This lets them focus on improving the overall experience of the code.

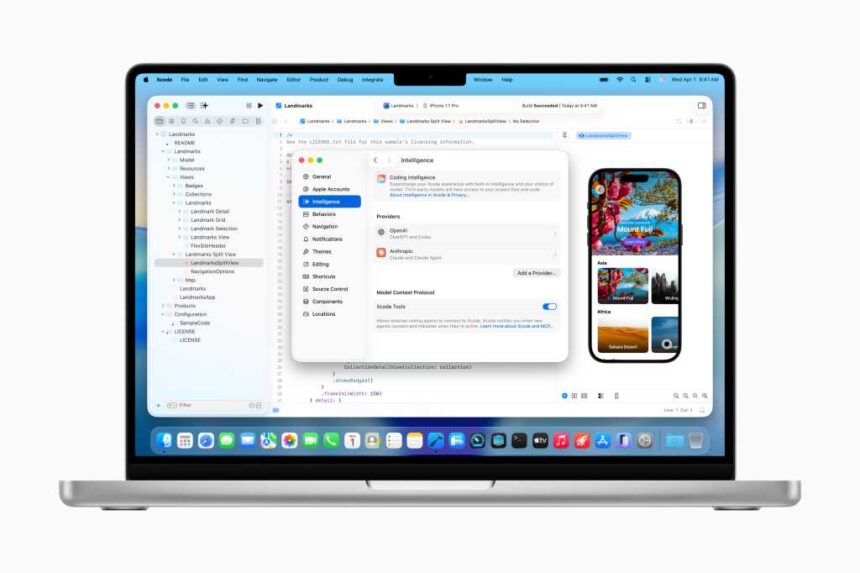

Finally, the introduction of Model Context Protocol (MCP) support delivers much more than the press statement explains. As long as the IDE is running, users can browse and search the Xcode project structure; read, write, and delete files and groups; build projects (including structure and build logs); run fault diagnostics; execute tasks; and more, all using their choice of MCP-supporting agent models.
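For readers unfamiliar with MCP, a minimal sketch of what an agent sends to an MCP server may help. MCP messages use the JSON-RPC 2.0 envelope, and `tools/list` and `tools/call` are methods defined in the MCP specification. Everything else here is an assumption for illustration: the tool name `example_build_project` and its `scheme` argument are hypothetical and are not Xcode's actual tool names, and the sketch leaves out the transport and the initialization handshake.

```python
import json
from itertools import count

# Monotonic request ids, so each response can be matched to its request.
_ids = count(1)


def jsonrpc_request(method, params=None):
    """Build a JSON-RPC 2.0 request dict, the envelope MCP messages use."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


# Ask the server which tools it exposes.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name. The name and arguments below are hypothetical
# placeholders, not tools that Xcode is known to expose.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "example_build_project", "arguments": {"scheme": "MyApp"}},
)

print(json.dumps(list_tools))
print(json.dumps(call_tool, indent=2))
```

In practice an agent would first call `tools/list` to discover what the running IDE offers, then pick tool names and arguments from that response rather than hard-coding them.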

What comes next?

There are some risks coming into view. Vibe coding at scale will happen, and when it does it will introduce a flotilla of rapidly created apps, some of which might include security flaws if they are not verified and checked correctly. That’s even before you consider the tendency of large language models (LLMs) to hallucinate.
