Every step of the way, AGI could interpret complex, ambiguous data, recognizing patterns and anomalies that humans or traditional AI systems might miss. For example, it could refine existing large language models by identifying and then removing or correcting false, inaccurate, or biased training data. This paves the way for self-improvement, in which AGI agents learn to become better scientists by improving their own methods and tools.

AGI could also enable cross-lab collaboration, in which agents find other researchers (or research agents) working on similar projects and connect with them to share data, avoid duplicated effort, and develop insights faster.

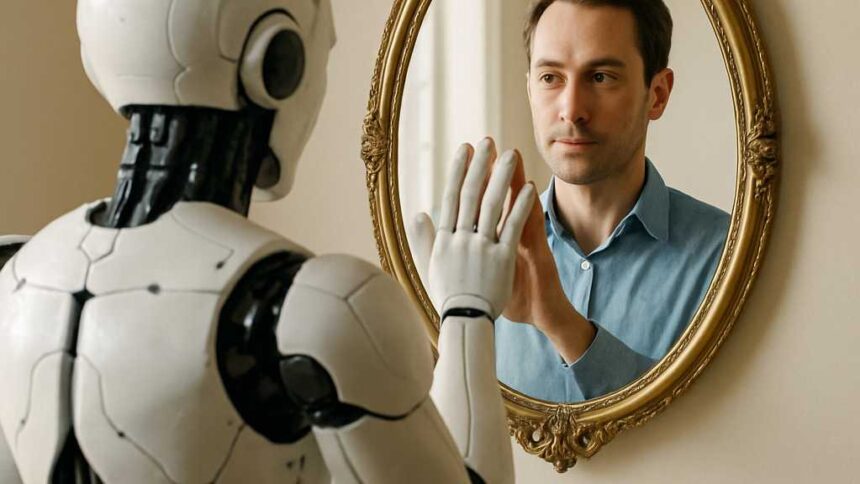

The most promising aspects of AGI (acting independently, improving itself, and cooperating with other AI agents) are also the most alarming. In recent tests, some OpenAI models not only refused to shut down when researchers told them to, but also actively sabotaged the scripts designed to shut them down. And researchers in China found that models from OpenAI, Anthropic, Meta, DeepSeek, and Alibaba all showed self-preservation behaviors, including blackmail, sabotage, self-replication, and escaping containment.
